Edge networking is a sophisticated and dynamic computing architecture that aims to bring cloud services closer to end users, improving responsiveness and reducing backhaul traffic. The primary dynamic aspects of edge networks are user mobility, user preferences, and content popularity. The global popularity of content is typically estimated from its temporal and social characteristics, such as the number of views and likes. However, such estimates do not always map well to an edge network with specific social and geographic characteristics. Next-generation edge networks employ machine learning techniques to forecast content popularity from user preferences, to cluster users with similar content interests, and to improve cache placement and replacement strategies. This work examines the use of machine learning techniques for in-network caching in edge networks.

Introduction

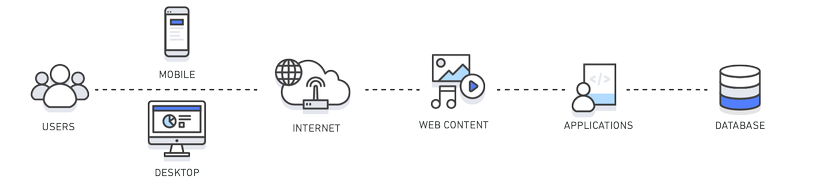

Mobile apps, which require low latency, mobility support, energy efficiency, and high bandwidth, rely on back-end data storage provided by cloud data centers and content delivery networks (CDNs). The latency between mobile users and geographically dispersed cloud data centers or CDNs depends strongly on distance. To address this problem, many networking technologies have been proposed and deployed to bring processing and caching capabilities closer to end users; examples include Mobile Edge Computing (MEC), fog computing, cloudlets, and information-centric networks. Through in-network caching, edge networks reduce the load on backhaul networks and cut content delivery delay. Key research questions for caching in mobile networks include which multimedia content is popular, whether content should be cached at base stations or at mobile users, and whether caching should be proactive or reactive. Although these questions are well defined, caching solutions still need further optimization.

To address these questions, machine learning is used in wireless networks to estimate future user requests from time-series information on user mobility, content popularity, and user preferences. Because little user data is available in edge networks, ML models that learn without prior information are frequently used to optimize caching decisions.

Edge-based Intelligence for Edge Caching

Edge caching refers to a user-centric cache at the network edge that has limited storage capacity and stores content relevant only to edge users. Because of this limited capacity and the dynamic characteristics of users (mobility, content access, etc.), edge caching decisions differ from CDN caching decisions. In edge networks, optimal caching decisions depend on several inputs, each associated with a task that can be optimized through learning:

- Content popularity and content popularity prediction task.

- User mobility and user mobility prediction task.

- Wireless channel and joint content identification task.

Although each of these tasks can also output caching decisions on its own, we further leverage Deep Heuristic, through Edge-based Intelligence for Edge Caching, to predict these input characteristics and use them to solve the caching optimization problem.
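To make the link between content popularity and a capacity-limited edge cache concrete, the sketch below assumes (as an illustration, not something stated in this document) that request popularity follows a Zipf distribution with exponent s over N items. A cache holding the C most popular items then serves a fraction h(C) = (sum over i = 1..C of i^(-s)) / (sum over i = 1..N of i^(-s)) of requests. The function names are hypothetical.

```python
# Hypothetical helpers; the Zipf popularity law is an illustrative assumption.


def zipf_popularity(n_items: int, s: float) -> list[float]:
    """Request probability of each item under an assumed Zipf(s) law, most popular first."""
    weights = [1.0 / rank**s for rank in range(1, n_items + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def zipf_hit_ratio(n_items: int, cache_size: int, s: float) -> float:
    """Fraction of requests served from a cache holding the cache_size most popular items."""
    if not 0 <= cache_size <= n_items:
        raise ValueError("cache_size must be between 0 and n_items")
    return sum(zipf_popularity(n_items, s)[:cache_size])


if __name__ == "__main__":
    # Illustrative setting: 1,000 items and a cache that holds 5% of them.
    for s in (0.6, 0.8, 1.0):
        print(f"s={s}: hit ratio {zipf_hit_ratio(1000, 50, s):.3f}")
```

A larger s concentrates requests on fewer items, so the same small cache captures more of the traffic; this is why knowing which items are popular at a given edge matters so much when storage is limited.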

A high-level conceptual framework of ML-based edge caching is depicted in Figure 2. Social awareness, mobility patterns, and user preferences are fed into an ML framework, which may be federated across multiple nodes and deployed at distributed MEC servers, F-RANs, or CDNs. Social networks, device-to-device (D2D) communications, and vehicle-to-infrastructure (V2I) communications provide the inputs for the ML-based caching decision framework. The resulting caching decisions are sent back to caches managed through network virtualization. Cache placement strategies are also being extended with UAV-mounted caches, which can be steered toward user populations and real-time events. The following subsections examine the three tasks listed above, each of which plays an important role in producing caching decisions in the Smart Edge Caching System.
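The framework above notes that the ML model may be federated across multiple nodes. One common way to combine locally trained models is federated averaging (FedAvg), in which each node's parameters are weighted by its number of local training samples. The sketch below assumes each edge node (for example, an MEC server) reports a flat parameter vector and a sample count; this document does not state which aggregation rule its framework uses, and the function name and example values are hypothetical.

```python
from typing import Sequence

# Hypothetical aggregation helper illustrating FedAvg-style weighting.


def federated_average(
    node_params: Sequence[Sequence[float]], node_samples: Sequence[int]
) -> list[float]:
    """Sample-weighted average of the parameter vectors reported by edge nodes."""
    if not node_params or len(node_params) != len(node_samples):
        raise ValueError("need one sample count per parameter vector")
    dim = len(node_params[0])
    if any(len(p) != dim for p in node_params):
        raise ValueError("all parameter vectors must have the same length")
    total = sum(node_samples)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    global_params = [0.0] * dim
    for params, n in zip(node_params, node_samples):
        weight = n / total  # nodes with more local data contribute more
        for j, value in enumerate(params):
            global_params[j] += weight * value
    return global_params


if __name__ == "__main__":
    # Two hypothetical MEC servers with 300 and 100 local training samples.
    print(federated_average([[1.0, 2.0], [5.0, 6.0]], [300, 100]))
```

Only parameters, not raw user requests, leave each node in this scheme, which fits the privacy and backhaul constraints of edge deployments.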

1. Content Popularity Prediction for Caching.

Our system uses a cloud-based architecture in which a master node applies supervised learning and deep learning in two phases to estimate video popularity. The system then automatically pushes the learned caching decisions to the base stations (BSs), which act as slave nodes. First, a data collector module gathers the number of requests for each video ID; supervised learning uses these data to predict the future popularity and popularity class of each video. Deep learning algorithms are then used to estimate the future number of requests for each video. See Figure 3 and Figure 4 for details of the system model and the architecture of the master node.
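The first phase starts from a data collector that counts requests per video ID and turns those counts into popularity classes for supervised learning. The sketch below is a minimal illustration under assumptions not given in this document: requests arrive as (time slot, video ID) pairs, and a video's class label is derived from its request count in the next slot using hypothetical thresholds. All function names are hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical data-collector helpers; the class thresholds are illustrative assumptions.


def collect_counts(requests):
    """Group (time_slot, video_id) requests into per-slot request counts."""
    per_slot = defaultdict(Counter)
    for slot, video_id in requests:
        per_slot[slot][video_id] += 1
    return per_slot


def label_popularity(count, thresholds=(2, 5)):
    """Hypothetical class rule: 0 = unpopular, 1 = moderately popular, 2 = popular."""
    low, high = thresholds
    if count >= high:
        return 2
    if count >= low:
        return 1
    return 0


def build_training_set(per_slot, slot):
    """Rows of (video_id, request count in `slot`, popularity class in `slot + 1`)."""
    current, upcoming = per_slot[slot], per_slot[slot + 1]
    videos = sorted(set(current) | set(upcoming))
    return [(vid, current[vid], label_popularity(upcoming[vid])) for vid in videos]


if __name__ == "__main__":
    log = [(0, "a"), (0, "a"), (0, "b")] + [(1, "a")] * 5 + [(1, "b")] * 2 + [(1, "c")]
    print(build_training_set(collect_counts(log), 0))
```

A supervised classifier would then be trained on such rows, while the raw per-slot counts could also serve as the time series for the deep-learning estimator of future request numbers.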

The algorithm below carries out the training process for the estimation model at the cloud data center to improve prediction accuracy. It takes as inputs the best model and a subset of the raw data, and it produces an optimally trained model for predicting popularity scores. First, the accuracy measurement metrics are initialized to zero. The learning rate lm and the regularization rate are then set to fixed values.
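A minimal sketch of such a training routine follows. Because this document does not specify the model or the metrics, it assumes a linear popularity model fitted by stochastic gradient descent with an L2 penalty, and uses RMSE and MAE as stand-ins for the unnamed accuracy metrics. The parameter `lm` follows the text's name for the learning rate; `reg`, the default values, and the function names are hypothetical. The optional `init` argument stands in for the "best model" input and is used as a warm start.

```python
import math
import random

# Hypothetical training routine: linear model + SGD + L2 regularization (assumptions).


def train_popularity_model(samples, init=None, lm=0.01, reg=0.001, epochs=200, seed=0):
    """Fit popularity ~ w . x + b on (feature_vector, popularity) pairs."""
    rng = random.Random(seed)
    dim = len(samples[0][0])
    if init is None:
        w, b = [0.0] * dim, 0.0
    else:
        w, b = list(init[0]), float(init[1])  # warm start from the best model so far
    data = list(samples)
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            err = sum(wi * xi for wi, xi in zip(w, x)) + b - y
            # Gradient step on squared error plus an L2 penalty on the weights.
            w = [wi - lm * (err * xi + reg * wi) for wi, xi in zip(w, x)]
            b -= lm * err
    return w, b


def evaluate(model, samples):
    """Accuracy metrics: start at zero, accumulate errors, then normalize."""
    w, b = model
    sq_err, abs_err = 0.0, 0.0
    for x, y in samples:
        err = sum(wi * xi for wi, xi in zip(w, x)) + b - y
        sq_err += err * err
        abs_err += abs(err)
    n = len(samples)
    return {"rmse": math.sqrt(sq_err / n), "mae": abs_err / n}
```

For example, fitting noiseless data generated from popularity = 2x + 1 with `lm=0.05`, `reg=0.0`, and 500 epochs recovers weights close to 2 and 1; a nonzero `reg` trades a little bias for robustness on noisy request data.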

Alg. 2 describes the caching procedure at the BS, which aims to maximize the cache hit ratio. Its inputs are user requests, BS log files, and the models learned at the cloud data center, which are used to forecast popularity scores. Its output is a decision on whether to cache the content predicted to be popular.
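The BS-side decision can be sketched as a score-based admission and eviction rule. This is an illustrative assumption, not the document's Alg. 2: predicted popularity scores are assumed to come from the cloud-trained model (passed in as the hypothetical callable `predict_score`), BS log handling is omitted, and on a miss the content is cached only if its score beats the lowest-scored item already in the cache.

```python
from typing import Callable, Dict, Iterable

# Hypothetical BS cache simulation; the admission/eviction rule is an assumption.


def run_bs_cache(
    requests: Iterable[str],
    predict_score: Callable[[str], float],
    capacity: int,
) -> float:
    """Serve requests at a BS cache and return the resulting cache hit ratio."""
    cache: Dict[str, float] = {}  # content ID -> predicted popularity score
    hits = total = 0
    for content in requests:
        total += 1
        if content in cache:
            hits += 1
            continue
        if capacity <= 0:
            continue  # nothing can be cached
        score = predict_score(content)
        if len(cache) < capacity:
            cache[content] = score
        else:
            # Evict the least promising item only if the new content is predicted to be more popular.
            victim = min(cache, key=cache.get)
            if score > cache[victim]:
                del cache[victim]
                cache[content] = score
    return hits / total if total else 0.0
```

With scores {"a": 0.9, "b": 0.5, "c": 0.1} and a two-item cache, the low-scored "c" is never admitted, so "a" and "b" stay cached and every repeat request for them is a hit.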

2. Mobility Prediction for Caching.

Caching for mobile users is increasingly important as wearable high-tech devices become more popular, so mobility prediction is essential for maximizing the cache hit ratio for mobile devices. Here, we use three mobility models (Temporal Dependency, Spatial Dependency, and Geographic Restriction) to forecast user locations in mobile networks.

Mobility models describe how mobile nodes move, including how their position, velocity, and acceleration change over time. Under the Temporal Dependency model, prediction systems assume that a mobile node's trajectory is constrained by physical properties such as acceleration, velocity, direction, and movement history. Under the Spatial Dependency model, prediction rests on the premise that mobile nodes tend to move in a correlated way, so that the mobility pattern of one node is influenced by those of nearby nodes.
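As a concrete instance of a temporal-dependency model, the sketch below implements the Gauss-Markov mobility model, a standard model from the mobility literature chosen here for illustration (this document does not say which temporal model it uses). Each step's speed and direction mix the previous value, a long-run mean, and Gaussian noise: s_n = alpha * s_(n-1) + (1 - alpha) * s_mean + sqrt(1 - alpha^2) * w_n, with the memory parameter alpha between 0 (memoryless) and 1 (straight-line motion). The function name and default noise levels are hypothetical.

```python
import math
import random

# Hypothetical Gauss-Markov trace generator; noise defaults are illustrative assumptions.


def gauss_markov_trace(steps, alpha, mean_speed, mean_dir,
                       sigma_speed=0.5, sigma_dir=0.3, start=(0.0, 0.0), seed=0):
    """Return a list of (x, y) positions produced by the Gauss-Markov mobility model."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    rng = random.Random(seed)
    x, y = start
    speed, direction = mean_speed, mean_dir
    noise_scale = math.sqrt(1.0 - alpha * alpha)
    trace = [(x, y)]
    for _ in range(steps):
        # Position advances with the previous step's speed and heading.
        x += speed * math.cos(direction)
        y += speed * math.sin(direction)
        trace.append((x, y))
        # alpha keeps memory of the past; (1 - alpha) pulls toward the long-run mean.
        speed = (alpha * speed + (1 - alpha) * mean_speed
                 + noise_scale * rng.gauss(0.0, sigma_speed))
        direction = (alpha * direction + (1 - alpha) * mean_dir
                     + noise_scale * rng.gauss(0.0, sigma_dir))
    return trace
```

A higher alpha makes the current velocity a better predictor of the next position, which is exactly the temporal dependency a location predictor can exploit.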

Under the Geographic Restriction model, node trajectories are shaped by the environment, and node mobility is limited by geographic constraints; for example, buildings and other obstacles may obstruct pedestrians. To handle these constraints, we employ a fully cloudified mobility prediction service that supports on-demand management of the mobility prediction life cycle. The prediction method is based on a Dynamic Bayesian Network (DBN) model. DBN is adopted because the next place a user visits is determined by (i) the user's current position, (ii) the movement duration, and (iii) the day on which the user is moving. Figure 5 illustrates how content is relocated for mobile users; the resulting content relocations are used to improve the cache hit ratio.
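The DBN's key dependency, the next place given (i) the current position, (ii) the movement duration, and (iii) the day, can be illustrated through its conditional probability table. When all of these variables are observed and discrete, the maximum-likelihood estimate of that table reduces to counting transitions, which is what the sketch below does. It is a simplification rather than a full DBN (no hidden states or inference over time), and the class name, place labels, and 30-minute duration buckets are hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical predictor: ML estimate of P(next | place, duration bucket, day) by counting.


class NextPlacePredictor:
    def __init__(self, duration_bucket_minutes=30):
        self.bucket = duration_bucket_minutes
        self.table = defaultdict(Counter)  # (place, bucket, day) -> Counter of next places

    def _key(self, place, duration_min, day):
        # Discretize movement duration so it can index a conditional probability table.
        return (place, int(duration_min // self.bucket), day)

    def observe(self, place, duration_min, day, next_place):
        self.table[self._key(place, duration_min, day)][next_place] += 1

    def predict(self, place, duration_min, day):
        """Most likely next place and its estimated probability, or (None, 0.0) if unseen."""
        counts = self.table.get(self._key(place, duration_min, day))
        if not counts:
            return None, 0.0
        next_place, n = counts.most_common(1)[0]
        return next_place, n / sum(counts.values())
```

The predicted next place and its probability could then decide which content is relocated toward the cache the user is expected to reach.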

3. Joint Content Identification for Caching.

Jointly optimizing content caching and mode selection for slice instances is essential in fog radio access networks (F-RANs). In F-RANs, a fog-computing layer is established at the network edge, so part of the service demand can be met locally without involving the cloud computing center over the fronthaul links. In particular, we consider hotspot and vehicle-to-infrastructure scenarios and coordinate their network slice instances in the F-RAN. Given the diverse user requirements and limited resources, solving the original optimization problem with standard optimization techniques is highly complex. We therefore adopt a deep reinforcement learning-based method, which is well suited to complex network optimization problems. In this setting, the cloud server makes these intelligent decisions to improve the hit ratio and the total transmission rate.

Figure 6 shows the system model of the RAN slice instances in the F-RAN. Customized RAN slice instances are deployed to serve hotspot UEs and V2I UEs, and a separate set is defined for each type of UE. The hotspot UEs in the hotspot slice instance require high data rates. Accordingly, remote radio units (RRUs) are distributed among the UEs and connected to the cloud server via fronthaul links. Fog access points (F-APs) that cache popular content are deployed in specific zones to relieve the load on the fronthaul. F-APs communicate with the cloud server through the interface. In the V2I slice instance, both the F-AP mode and the RRU mode are available to V2I UEs that require delay-guaranteed data transmission.

Here, we employ a deep reinforcement learning (DRL)-based approach in which a deep Q-network (DQN) is built from historical data (see the algorithm below for the DRL process).
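Training a DQN requires a neural-network library, so the sketch below instead uses tabular Q-learning, the update rule a DQN builds on, with a lookup table in place of the neural network. It runs on a deliberately tiny, hypothetical version of the mode-selection problem: the state is whether the requested content is cached at the nearest F-AP, the action is F-AP mode or RRU mode, and the reward values and cache probability are invented for illustration rather than taken from this document. All names are hypothetical.

```python
import random

# Toy tabular Q-learning stand-in for the DQN; rewards and probabilities are assumptions.
FAP_MODE, RRU_MODE = 0, 1
ACTIONS = (FAP_MODE, RRU_MODE)


def reward(cached: int, action: int) -> float:
    """Hypothetical reward: local F-AP delivery is best, but only when the content is cached."""
    if action == FAP_MODE:
        return 1.0 if cached else -1.0  # edge hit vs. miss at the F-AP
    return 0.3  # RRU mode: served from the cloud over fronthaul, at a higher cost


def train_q_table(steps=20000, lr=0.1, gamma=0.9, epsilon=0.2, p_cached=0.5, seed=0):
    """Epsilon-greedy Q-learning over states {0: not cached, 1: cached}."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in (0, 1) for a in ACTIONS}
    state = 1 if rng.random() < p_cached else 0
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)  # explore
        else:
            action = max(ACTIONS, key=lambda a: q[(state, a)])  # exploit
        r = reward(state, action)
        # Toy assumption: the next request's cache state does not depend on the action.
        next_state = 1 if rng.random() < p_cached else 0
        target = r + gamma * max(q[(next_state, a)] for a in ACTIONS)
        q[(state, action)] += lr * (target - q[(state, action)])
        state = next_state
    return q


def greedy_policy(q):
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in (0, 1)}
```

With these rewards the learned policy should select F-AP mode when the content is cached and RRU mode otherwise; a DQN replaces the table with a network so the same update scales to large state spaces such as many contents, slices, and channel conditions.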